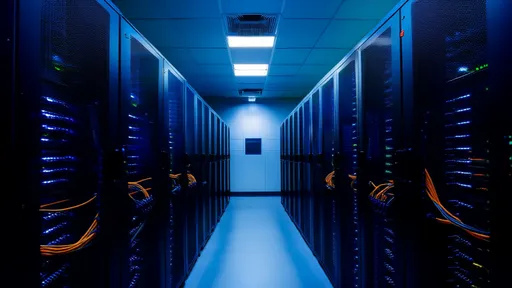

The rapid expansion of edge computing infrastructure has brought unprecedented challenges in thermal management, pushing engineers and researchers to develop innovative cooling solutions. As edge nodes proliferate in harsh environments—from factory floors to desert oil fields—traditional air-cooling methods often prove inadequate. This technological evolution isn't just about preventing overheating; it's becoming a critical factor in determining computational performance, hardware longevity, and energy efficiency across distributed networks.

Liquid Cooling Breakthroughs for Compact Edge Deployments

Recent advancements in direct-to-chip liquid cooling have revolutionized thermal management for edge servers. Unlike data center implementations that require elaborate plumbing, new microfluidic systems integrate seamlessly into ruggedized edge enclosures. Engineers at several major OEMs have developed self-contained cold plates that circulate dielectric coolant through microchannels etched directly into processor lids. These systems achieve 40-50% better heat transfer than conventional heat sinks while consuming minimal additional power.

What makes these solutions particularly groundbreaking is their passive operation in many edge scenarios. By leveraging the phase-change properties of advanced refrigerants, some next-generation cooling units can operate pump-free below certain thermal thresholds. This reliability boost is crucial for remote installations where maintenance intervals must be extended. Field tests by telecommunications providers show these liquid-cooled edge nodes maintaining optimal temperatures during peak loads while reducing cooling-related energy consumption by up to 60% compared to forced-air alternatives.
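The pump-free behaviour below a thermal threshold can be pictured as a simple hysteresis rule. The following is a minimal sketch, not any vendor's control logic: the function name `pump_should_run` and both thresholds (70 °C on, 60 °C off) are assumptions chosen for illustration.

```python
def pump_should_run(temp_c, pump_running, on_above_c=70.0, off_below_c=60.0):
    """Hysteresis rule: start the pump when hot, stop it when cool,
    and keep the current state in between to avoid rapid toggling.

    Both thresholds are hypothetical; real units would set them per design.
    """
    if temp_c >= on_above_c:
        return True
    if temp_c <= off_below_c:
        return False
    return pump_running


# Walk through an illustrative temperature trace (degrees Celsius).
state = False
trace = []
for reading in [55, 65, 72, 66, 61, 59, 64]:
    state = pump_should_run(reading, state)
    trace.append(state)

print(trace)  # [False, False, True, True, True, False, False]
```

The gap between the two thresholds is the design choice that matters here: with a single threshold, a node hovering near it would switch the pump on and off repeatedly, which works against the reliability goal described above.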

Phase-Change Materials Reshape Thermal Buffering

Another transformative approach emerging from materials science labs involves embedded phase-change materials (PCMs) within edge computing hardware. These proprietary wax-based compounds absorb excess heat by melting at precisely calibrated temperatures, then gradually release thermal energy as they resolidify during low-utilization periods. Early adopters in the industrial IoT sector report dramatically reduced temperature spikes during intermittent high-load operations typical of predictive maintenance applications.

The implementation sophistication has reached new levels with microencapsulated PCMs being woven into circuit board substrates themselves. This architectural integration provides localized thermal regulation exactly where transient hot spots occur—near GPUs accelerating machine learning workloads or near memory modules handling real-time analytics. Perhaps most impressively, these solutions add negligible weight while eliminating the need for active cooling components in many moderate-climate deployments.
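To get a feel for how much buffering a PCM can provide, the latent heat it absorbs while melting is Q = m × L, and dividing by the excess heat load gives the time it can hold a spike. The sketch below uses illustrative numbers that are assumptions rather than figures from any deployment: 50 g of a paraffin-like material, a latent heat of roughly 200 kJ/kg, and a 20 W excess load. It ignores sensible heating and heat lost to the surroundings.

```python
def pcm_buffer_seconds(mass_kg, latent_heat_j_per_kg, excess_power_w):
    """Time (s) a PCM can absorb `excess_power_w` while melting.

    Counts latent heat only (Q = m * L); sensible heating before and
    after the phase change is ignored, so this is a rough estimate.
    """
    if excess_power_w <= 0:
        raise ValueError("excess_power_w must be positive")
    return mass_kg * latent_heat_j_per_kg / excess_power_w


# Assumed values for illustration: 50 g of PCM, ~200 kJ/kg, 20 W excess heat.
seconds = pcm_buffer_seconds(0.05, 200_000, 20.0)
print(f"{seconds:.0f} s of buffering")  # 500 s of buffering
```

Under these assumptions the material holds off a 20 W spike for a little over eight minutes, which is the right order of magnitude for the intermittent bursts described above.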

Artificial Intelligence Meets Thermal Optimization

Perhaps the most disruptive innovation comes from applying machine learning to dynamic cooling control. Modern edge management software now incorporates real-time thermal modeling that predicts heat accumulation patterns based on workload characteristics, ambient conditions, and equipment aging factors. These AI-driven systems don't just react to temperature sensors—they proactively adjust fan speeds, workload distribution, and even processor clock rates to maintain optimal thermal margins.
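The difference between reacting and predicting can be sketched in a few lines. In the example below, a hand-written first-order thermal model stands in for the learned model, so this is plain model-based prediction rather than machine learning. The class `ThermalModel`, the `FAN_LEVELS` table, the 85 °C limit, and the assumption that power scales linearly with clock rate are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class ThermalModel:
    """First-order lumped model: C * dT/dt = P - (T - T_ambient) / R."""
    heat_capacity_j_per_k: float

    def predict(self, temp_c, ambient_c, power_w, r_th_k_per_w,
                horizon_s, step_s=1.0):
        """Forward-Euler estimate of the temperature after `horizon_s`."""
        t = temp_c
        for _ in range(int(horizon_s / step_s)):
            net_heat_w = power_w - (t - ambient_c) / r_th_k_per_w
            t += step_s * net_heat_w / self.heat_capacity_j_per_k
        return t


# Hypothetical fan settings: (duty cycle, resulting thermal resistance in K/W).
FAN_LEVELS = [(0.3, 0.60), (0.6, 0.40), (1.0, 0.30)]


def choose_action(model, temp_c, ambient_c, forecast_power_w,
                  limit_c=85.0, horizon_s=60.0):
    """Return (fan_duty, clock_scale) for the forecast workload.

    Picks the lowest fan duty whose predicted temperature stays within
    the limit; if no fan setting suffices, lowers the clock as well.
    """
    for duty, r_th in FAN_LEVELS:
        predicted = model.predict(temp_c, ambient_c, forecast_power_w,
                                  r_th, horizon_s)
        if predicted <= limit_c:
            return duty, 1.0

    # Fans alone are not enough: throttle at full fan speed.
    # Simplification: power is assumed proportional to clock rate.
    duty, r_th = FAN_LEVELS[-1]
    for scale in (0.9, 0.8, 0.7, 0.6, 0.5):
        predicted = model.predict(temp_c, ambient_c,
                                  forecast_power_w * scale, r_th, horizon_s)
        if predicted <= limit_c:
            return duty, scale
    return duty, 0.5


model = ThermalModel(heat_capacity_j_per_k=50.0)
print(choose_action(model, 50.0, 30.0, 40.0))   # (0.3, 1.0)
print(choose_action(model, 70.0, 45.0, 150.0))  # (1.0, 0.8)
```

In the second call the node is still below the limit at 70 °C, yet the controller already raises the fans and lowers the clock because the forecast load would push it past 85 °C within the minute. A purely reactive controller would wait for the sensor to cross the limit first.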

Several tier-1 automotive manufacturers have implemented such systems in their roadside edge computing units for autonomous vehicle support. By analyzing historical thermal data across thousands of nodes, their algorithms have learned to anticipate cooling needs based on traffic patterns, weather forecasts, and even the orientation of equipment cabinets relative to sunlight. The results include 30% longer component lifespans and the ability to safely overclock processors during critical operations without risking thermal throttling.

Renewable Thermal Energy Harvesting Emerges

Forward-thinking engineers are transforming the thermal challenge into an energy opportunity. Experimental edge installations now demonstrate the viability of converting waste heat into supplementary power through compact thermoelectric generators. While current conversion efficiencies remain modest (typically 5-8%), the recovered energy proves sufficient to power onboard monitoring systems or extend battery life for backup power supplies.
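A quick calculation shows what those efficiencies mean in practice. The sketch below assumes a hypothetical node that dissipates 200 W and, optimistically, that all of that heat passes through the generator, so the results are upper bounds rather than measured values.

```python
def teg_recovered_power_w(waste_heat_w, efficiency):
    """Electrical power recovered from waste heat at a given efficiency."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return waste_heat_w * efficiency


# Hypothetical 200 W edge node, using the 5-8% range quoted above.
low = teg_recovered_power_w(200.0, 0.05)
high = teg_recovered_power_w(200.0, 0.08)
print(f"{low:.1f} W to {high:.1f} W recovered")  # 10.0 W to 16.0 W recovered
```

Ten to sixteen watts is small next to the node's own draw, but it is in the range that low-power monitoring electronics or a trickle charger for a backup battery could plausibly use.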

More remarkably, some Arctic deployments have begun using the temperature differential between internally generated heat and the frigid environment to power auxiliary systems. These implementations combine advanced thermoelectric materials with vacuum-insulated enclosures that carefully regulate heat dissipation rates. Because the recovered electricity is only a fraction of the heat the node itself generates, the node does not become a net energy producer. In extreme cold, however, the approach both meets the cooling requirement and lets thermal management offset part of its own energy cost.

The ongoing revolution in edge cooling technologies reflects the computing paradigm's unique constraints and opportunities. As 5G networks densify and latency-sensitive applications proliferate, thermal innovation will remain a critical enabler for pushing computational capabilities to the network's edge. The solutions emerging today—whether based on advanced materials, intelligent control systems, or energy-recycling approaches—are collectively redefining what's possible in distributed computing infrastructure.

By /Jul 11, 2025
