The automotive industry is undergoing a profound transformation, driven by the rapid integration of advanced technologies into vehicle cabins. Among these innovations, multimodal interaction stands out as a game-changer, redefining how drivers and passengers engage with their vehicles. By seamlessly combining voice, touch, gesture, and even gaze recognition, modern smart cabins are creating intuitive and immersive experiences that prioritize safety, convenience, and personalization.

At the heart of this evolution lies the convergence of multiple sensory inputs. Gone are the days when drivers had to fumble with buttons or navigate complex menus while on the road. Today's systems can interpret natural speech patterns, respond to hand waves, or even detect where a passenger is looking. This holistic approach to human-machine interaction doesn't just reduce distractions—it anticipates user needs before they're explicitly stated.

Automakers are partnering with tech giants and specialized startups to push the boundaries of what's possible. The latest cockpit designs incorporate arrays of microphones, high-resolution cameras, and advanced haptic feedback surfaces. These components work in concert to create a responsive environment where commands can be issued and executed through the most natural means available at any given moment. For instance, a driver might adjust the temperature with a voice command while simultaneously zooming the navigation map with a pinch gesture.

What makes multimodal systems particularly powerful is their contextual awareness. Artificial intelligence algorithms analyze the cabin environment in real time, determining which interaction mode makes the most sense based on driving conditions, user preferences, and even emotional states detected through biometric sensors. This intelligence allows the system to prioritize critical alerts during high-speed maneuvers while enabling more relaxed interactions during casual cruising.
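To make the idea of context-dependent mode selection concrete, here is a minimal rule-based sketch. Everything in it is an assumption for illustration: the `CabinContext` fields, the `HIGH_SPEED_KMH` and `HIGH_STRESS` thresholds, and the functions `allowed_modalities` and `should_defer_notification` are hypothetical, and a production system would more likely use learned models and thresholds tuned through human-factors testing.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    VOICE = "voice"
    TOUCH = "touch"
    GESTURE = "gesture"


@dataclass
class CabinContext:
    speed_kmh: float             # current vehicle speed
    maneuver_active: bool        # hypothetical signal: lane change or merge in progress
    stress_level: float          # hypothetical biometric estimate, 0.0 (calm) to 1.0 (high)
    prefers_touch: bool = False  # stored user preference


# Hypothetical thresholds chosen only for illustration.
HIGH_SPEED_KMH = 80.0
HIGH_STRESS = 0.7


def allowed_modalities(ctx: CabinContext) -> list[Modality]:
    """Return the input modalities to offer, most preferred first."""
    if ctx.maneuver_active or ctx.stress_level >= HIGH_STRESS:
        # Demanding situation: keep hands on the wheel and eyes on the road.
        return [Modality.VOICE]
    if ctx.speed_kmh >= HIGH_SPEED_KMH:
        # At speed, allow short gestures but no touchscreen navigation.
        return [Modality.VOICE, Modality.GESTURE]
    # Casual cruising: offer everything, honoring the user's preferred mode.
    order = [Modality.VOICE, Modality.TOUCH, Modality.GESTURE]
    if ctx.prefers_touch:
        order.remove(Modality.TOUCH)
        order.insert(0, Modality.TOUCH)
    return order


def should_defer_notification(ctx: CabinContext, critical: bool) -> bool:
    """Hold non-critical notifications while the driving task is demanding."""
    demanding = ctx.maneuver_active or ctx.speed_kmh >= HIGH_SPEED_KMH
    return demanding and not critical


if __name__ == "__main__":
    cruising = CabinContext(speed_kmh=50, maneuver_active=False,
                            stress_level=0.2, prefers_touch=True)
    print([m.value for m in allowed_modalities(cruising)])  # ['touch', 'voice', 'gesture']
```

The rules are deliberately ordered from most to least restrictive, so a demanding situation always overrides a stored preference; that mirrors the article's point that critical alerts take priority during maneuvers while relaxed interaction is allowed when cruising.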

The safety implications are significant. Some studies report that multimodal interfaces can reduce cognitive load by up to 40% compared with traditional touchscreen-heavy designs. When drivers can choose their preferred interaction method, or when the system suggests the most suitable one for the situation, their attention stays where it belongs: on the road. This becomes especially important as vehicles add more complex infotainment and connectivity features that could otherwise overwhelm users.

From a design perspective, implementing effective multimodal systems requires careful consideration of human factors. Engineers must account for cultural differences in gesture recognition, regional speech patterns for voice control, and ergonomic principles for touch interfaces. The most successful implementations create a unified interaction language that feels consistent across all modalities, avoiding the cognitive dissonance that can occur when different systems respond differently to similar intents.
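One way to picture a "unified interaction language" is a layer that maps modality-specific inputs onto a shared set of intents, so that the downstream handler behaves identically whether the driver speaks, taps, or gestures. The sketch below assumes that design; the `Intent` and `InputEvent` types, the `INTENT_TABLE` vocabulary, and the `Cabin` handler are all hypothetical names invented for illustration, not any vendor's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Intent:
    name: str          # modality-independent action, e.g. "map.zoom"
    value: float = 0.0


@dataclass(frozen=True)
class InputEvent:
    modality: str  # "voice", "touch", or "gesture"
    payload: str   # hypothetical: recognized phrase, widget id, or gesture label


# Hypothetical per-modality vocabularies that all resolve to the same intents.
INTENT_TABLE: dict[tuple[str, str], Intent] = {
    ("voice", "zoom in"): Intent("map.zoom", 1),
    ("gesture", "pinch_out"): Intent("map.zoom", 1),
    ("touch", "zoom_plus_button"): Intent("map.zoom", 1),
    ("voice", "warmer"): Intent("climate.adjust_temp", 1.0),
    ("touch", "temp_up_button"): Intent("climate.adjust_temp", 1.0),
}


def resolve(event: InputEvent) -> Optional[Intent]:
    """Translate a raw input event into a shared intent, or None if unknown."""
    return INTENT_TABLE.get((event.modality, event.payload.lower()))


class Cabin:
    def __init__(self) -> None:
        self.zoom_level = 10
        self.target_temp_c = 21.0

    def handle(self, event: InputEvent) -> bool:
        """Apply an event; the logic depends only on the intent, not the modality."""
        intent = resolve(event)
        if intent is None:
            return False  # unrecognized input; a real system might ask for clarification
        if intent.name == "map.zoom":
            self.zoom_level += int(intent.value)
        elif intent.name == "climate.adjust_temp":
            self.target_temp_c += intent.value
        return True


if __name__ == "__main__":
    cabin = Cabin()
    # A voice command and a pinch gesture arriving together, as in the earlier example.
    cabin.handle(InputEvent("voice", "Warmer"))
    cabin.handle(InputEvent("gesture", "pinch_out"))
    print(cabin.target_temp_c, cabin.zoom_level)  # 22.0 11
```

Because "zoom in", a pinch-out, and the on-screen plus button all resolve to the same `Intent`, they cannot drift apart in behavior, which is the consistency across modalities the paragraph above describes.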

Looking ahead, the next frontier involves incorporating more sophisticated biometric and environmental data. Future smart cabins might adjust lighting and climate based on detected stress levels, or suggest rest stops when sensors indicate driver fatigue. The vehicle could even learn individual occupants' preferences over time, automatically adjusting seat positions and entertainment options as soon as it recognizes who's entered the car.

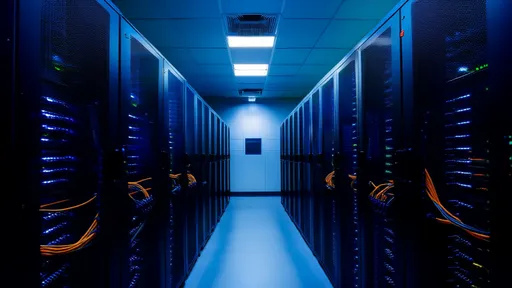

As these technologies mature, standardization will become increasingly important. The industry needs common protocols to ensure interoperability between different manufacturers' systems and third-party applications. This challenge presents both technical and regulatory hurdles, but the potential payoff—a truly seamless, intelligent mobility experience—makes the effort worthwhile.

The integration of multimodal interaction in smart cabins represents more than just technological progress; it signals a fundamental shift in how we conceptualize the relationship between humans and vehicles. No longer mere transportation tools, cars are evolving into responsive environments that understand and adapt to their occupants' needs through multiple channels of communication. This transformation will accelerate as 5G connectivity, edge computing, and advanced AI algorithms continue to mature, paving the way for even more sophisticated interactions in the vehicles of tomorrow.

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025

By /Jul 11, 2025